Persist the donor to DynamoDB

On the donor sign-up page we saw how to build and deploy a simple AWS Lambda function using Chalice that parses the JSON payload and returns it to the caller. On this page we are going to take that JSON payload and save it to a database.

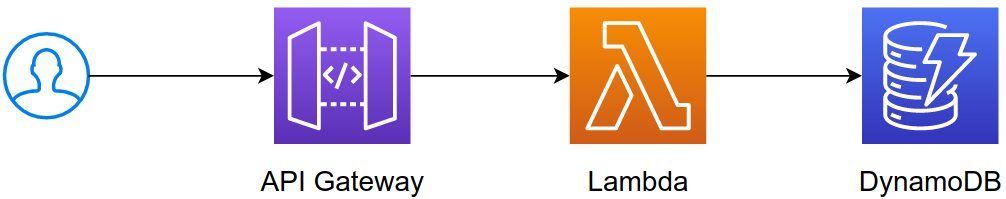

Looking at it from the architecture standpoint, this is what we want to achieve:

If we transformed it into steps, they would look like so:

- A user sends an HTTP POST request with a JSON payload
- API Gateway receives it and invokes the Lambda function
- Lambda function receives the JSON payload in the event argument and saves it to a database (with or without changes)
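The steps above can be sketched as a raw Lambda handler. This is only an illustration of the flow, not the workshop's code: it assumes an API Gateway proxy integration (where the request body arrives as a JSON string under the "body" key of the event), and FAKE_TABLE is a hypothetical in-memory stand-in for the database. Chalice hides this parsing behind app.current_request.json_body.

```python
import json

# Hypothetical in-memory stand-in for the database table.
FAKE_TABLE = []


def lambda_handler(event, context):
    """Sketch of a raw Lambda handler behind an API Gateway proxy integration."""
    # With proxy integration the HTTP request body is passed as a JSON string.
    donor = json.loads(event["body"])
    # Stand-in for the real database write.
    FAKE_TABLE.append(donor)
    return {"statusCode": 200, "body": json.dumps(donor)}


# Simulate what API Gateway would hand to the function for a POST request.
event = {
    "httpMethod": "POST",
    "path": "/donor/signup",
    "body": json.dumps({"first_name": "joe"}),
}
response = lambda_handler(event, None)
```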

What do we have so far?

If you have been following along, your ~/serverless_workshop/savealife/app.py should have the following contents:

    import logging
    from os import getenv

    from chalice import Chalice

    try:
        from dotenv import load_dotenv

        load_dotenv()
    except ImportError:
        pass

    first_name = getenv("WORKSHOP_NAME", "ivica")  # replace with your own name of course
    app = Chalice(app_name=f"{first_name}-savealife")
    app.log.setLevel(logging.DEBUG)


    @app.route("/donor/signup", methods=["POST"])
    def donor_signup():
        body = app.current_request.json_body
        app.log.debug(f"Received JSON payload: {body}")
        app.log.info("This is a INFO level message")
        return body

Persisting the JSON payload to a DynamoDB table

DynamoDB provides an HTTP API to perform actions: there are no persistent TCP connections, connection pools or anything similar. Want to save an item? Sure, there’s a PutItem API request that you can make. We will of course not be simple cavemen making raw API requests. We will be using Boto3, the very powerful AWS SDK for Python.
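To get a feeling for what Boto saves us from, here is a rough sketch of the request a PutItem call boils down to. It only builds the headers and JSON body; a real request would also have to be signed with AWS Signature Version 4, which is omitted here. The helper names and the "example-table" table name are made up for illustration.

```python
import json


def to_attribute_values(item):
    """Convert a flat dict into DynamoDB's typed attribute-value format."""
    converted = {}
    for key, value in item.items():
        if value is None:
            converted[key] = {"NULL": True}
        elif isinstance(value, bool):  # check bool first: bool is a subclass of int
            converted[key] = {"BOOL": value}
        elif isinstance(value, (int, float)):
            converted[key] = {"N": str(value)}  # numbers travel as strings
        else:
            converted[key] = {"S": str(value)}
    return converted


def build_put_item_request(table_name, item):
    """Return the headers and JSON body of an (unsigned) PutItem request."""
    headers = {
        "Content-Type": "application/x-amz-json-1.0",
        "X-Amz-Target": "DynamoDB_20120810.PutItem",
    }
    body = json.dumps({"TableName": table_name, "Item": to_attribute_values(item)})
    return headers, body


headers, body = build_put_item_request("example-table", {"first_name": "joe", "age": 42})
print(body)
```

With the boto3 table resource we pass a plain Python dictionary instead, and the SDK takes care of the type annotations and the request signing.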

Your requirements.txt file should have boto3 in it.

cat requirements.txt

will result in output similar to:

Fun fact: Do you know why it is called “boto”? It is named after the boto, a freshwater dolphin native to the Amazon river.

Chalice multifile support

Before we proceed any further, a little digression. Chalice as a framework allows us to ship arbitrary assets (JSON files, additional Python files etc.) with our Lambda function. Whatever files are placed in the chalicelib directory will be recursively included in the deployment.

The chalicelib/ folder must be located at the same level as your app.py, i.e. at ~/serverless_workshop/savealife/chalicelib/.

We can leverage this functionality to split up our application into multiple files as it grows. It is the perfect place to put our DynamoDB related code into.
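As a small illustration of shipping a non-Python asset this way, the sketch below reads a JSON file from a chalicelib/ directory. The cities.json file and the load_asset helper are hypothetical, and the project layout is emulated in a temporary directory so the snippet runs anywhere; in a real app.py the app_dir argument would be the directory that contains app.py.

```python
import json
import tempfile
from pathlib import Path


def load_asset(app_dir, name):
    """Read a JSON asset shipped in the chalicelib/ directory next to app.py."""
    return json.loads((Path(app_dir) / "chalicelib" / name).read_text())


# Emulate the project layout in a temporary directory.
with tempfile.TemporaryDirectory() as project:
    lib = Path(project) / "chalicelib"
    lib.mkdir()
    (lib / "__init__.py").touch()  # makes chalicelib a regular Python package
    (lib / "cities.json").write_text(json.dumps(["Zagreb", "Split"]))
    cities = load_asset(project, "cities.json")
```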

DynamoDB code

Add a file called db.py to ~/serverless_workshop/savealife/chalicelib/ with the following contents:

    import logging
    from os import getenv

    import boto3

    try:
        from dotenv import load_dotenv

        load_dotenv()
    except ImportError:
        pass

    ENV = getenv("ENV", "dev")
    first_name = getenv("WORKSHOP_NAME", "ivica")  # replace with your own name of course
    logger = logging.getLogger(f"{first_name}-savealife")

    _DB = None  # module-level cache, filled on the first get_app_db() call
    TABLE_NAME = getenv("TABLE_NAME")


    def get_app_db():
        global _DB
        if _DB is None:
            _DB = SavealifeDB(
                table=boto3.resource("dynamodb").Table(TABLE_NAME), logger=logger
            )
        return _DB


    class SavealifeDB:
        def __init__(self, table, logger):
            self._table = table
            self._logger = logger

        def donor_signup(self, donor_dict):
            try:
                self._table.put_item(
                    Item={
                        "first_name": donor_dict.get("first_name"),
                        "city": donor_dict.get("city"),
                        "type": donor_dict.get("type"),
                        "email": donor_dict.get("email"),
                    }
                )
                self._logger.debug(
                    f"Inserted donor '{donor_dict.get('email')}' into DynamoDB table '{self._table}'"
                )
                return True
            except Exception as exc:
                self._logger.exception(exc)
                return False  # report the failure instead of implicitly returning None

Let’s look at the SavealifeDB class. The donor_signup method is what interacts with the DynamoDB HTTP API.

Our table resource has a put_item() method which we use to… yeah, put an item into the table. The item itself is just a dictionary.
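Because the item is built with dict.get(), any field missing from the payload ends up as None in the item. One possible variation, shown here only as a sketch, is to keep just the fields that are present. Whether that is desirable depends on the table's key schema, which this page does not show. build_donor_item and FakeTable are hypothetical names; FakeTable merely records items so the snippet runs without AWS access.

```python
DONOR_FIELDS = ("first_name", "city", "type", "email")


def build_donor_item(donor_dict):
    """Keep only the known donor fields that are actually present."""
    return {
        field: donor_dict[field]
        for field in DONOR_FIELDS
        if donor_dict.get(field) is not None
    }


class FakeTable:
    """Hypothetical stand-in that records items instead of calling DynamoDB."""

    def __init__(self):
        self.items = []

    def put_item(self, Item):
        # Same keyword argument name as the boto3 table resource uses.
        self.items.append(Item)


table = FakeTable()
table.put_item(
    Item=build_donor_item(
        {"first_name": "joe", "email": "joe@example.com", "extra": 1}
    )
)
```

A stand-in like this can also be passed to SavealifeDB in place of the real table resource when testing locally.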

App code changes

Our application code from app.py will be a bit bulkier since we added logging on the logging page.

It looks like this:

    import logging
    from os import getenv

    from chalice import Chalice

    from chalicelib.db import get_app_db

    try:
        from dotenv import load_dotenv

        load_dotenv()
    except ImportError:
        pass

    first_name = getenv("WORKSHOP_NAME", "ivica")  # replace with your own name of course
    app = Chalice(app_name=f"{first_name}-savealife")
    app.log.setLevel(logging.DEBUG)


    @app.route("/donor/signup", methods=["POST"])
    def donor_signup():
        body = app.current_request.json_body
        app.log.debug(f"Received JSON payload: {body}")
        return get_app_db().donor_signup(body)

With the from chalicelib.db import get_app_db line we import the get_app_db function, which is the interface for interacting with DynamoDB. Changing the return statement will break our tests, but that’s all right.

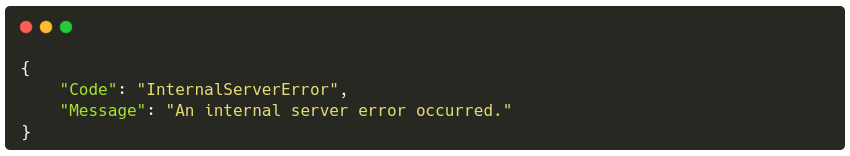

Invoke the local endpoint with http :8000/donor/signup first_name=joe and you will get a result similar to this:

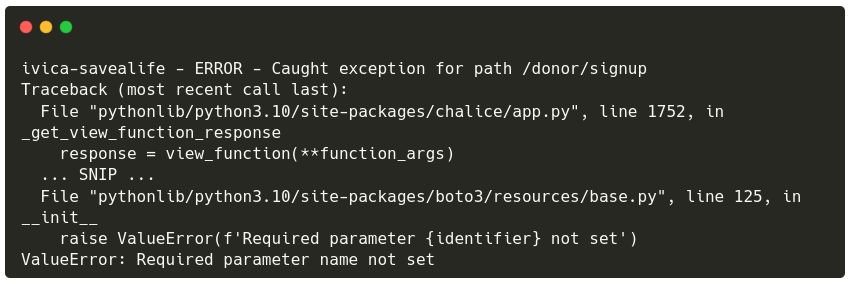

We can also see that chalice local shows a stack trace because an error happened.

Formatting the message in a different way, ValueError: Required parameter 'name' not set for example, would make it much clearer: a required parameter called name is not set. In our case, the name of the DynamoDB table is not set.
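One way to make this kind of failure easier to diagnose is to check the environment variable up front and fail with a descriptive message. The require_env helper below is a hypothetical sketch, not part of the workshop code; the env parameter exists only so the function can be exercised without touching the real environment.

```python
from os import environ


def require_env(name, env=environ):
    """Return the value of an environment variable or raise a descriptive error."""
    value = env.get(name)
    if not value:
        raise RuntimeError(f"Required environment variable '{name}' is not set")
    return value


# Simulate an environment in which TABLE_NAME is missing.
try:
    require_env("TABLE_NAME", env={})
except RuntimeError as exc:
    message = str(exc)
    print(message)
```

In db.py a call such as require_env("TABLE_NAME") could replace getenv("TABLE_NAME"), so the error names the missing variable instead of a generic name parameter.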